- November 8, 2023

- vastadmin


Introduction

In today’s bustling intersection of Development and Operations, efficiency and automation are not merely buzzwords. With Docker now a fixture of DevOps, containers have become synonymous with efficiency, portability and scalability. In this blog post, we’ll talk about the importance of Docker in DevOps and how a typical organization can learn how Docker works. You’ll also learn how to install Docker and how to run Docker containers. Let’s dig in!

What is DevOps?

DevOps is a collaboration between the Development (Dev) and Operations (Ops) teams. It helps to automate repetitive tasks and eases the maintenance of existing applications and deployments.

It is a cultural practice that improves communication between the Development and Operations teams and makes collaboration smoother. This reduces the time to market by cutting down the time lost in handoffs between teams. It also gives organizations a competitive advantage, as they can automate repetitive tasks and focus on other important work. By applying the best strategy for implementing DevOps, you can achieve higher efficiency and seamless deployment.


What is Docker?

Docker is an open-source containerization tool that packages your application and its dependencies together. This ensures that your application works seamlessly in any given environment. It also helps to orchestrate and manage containerized applications, allowing users to deploy and manage their applications easily.

Docker is often described as a Platform as a Service (PaaS) offering. It virtualizes at the operating system level and delivers applications in small packages called “containers”. Containers existed before Docker, but Docker made it easier for users to manage their applications with them.

What are virtual machines?

A virtual machine is a software emulation of a physical computer. It has all the elements of a normal computer, such as CPU, memory, network interface and storage, and functions like a physical machine. Setting one up requires special software, hardware support or both, typically in the form of a hypervisor.

What are Docker containers?

A Docker container is a lightweight, standalone package that bundles the code a developer is writing together with all its dependencies. It contains all the necessary components for running the software:

- Code

- Runtime

- Libraries

- Environment variables

- Config files

Docker containers help to transport the software from one environment to another.

Advantages of Docker

Docker is a tool that helps you to package your application and its dependencies together. Here are the advantages of Docker:

- Cost-efficient and large savings

- High ROI

- Agile Deployment

- Easy to maintain

- Continuous deployment and Continuous testing

- Less storage required

- Easy portability

- Quick and easy configurations

- Productivity and standardisation

- Isolation, security and segregation

How Docker works

Docker provides a standard way to run your code. Understanding how it works is fairly easy: Docker acts as an operating system for containers. In the same way that a virtual machine virtualizes server hardware, a container virtualizes the operating system of a server. Docker is installed on each server and provides commands used to build, start and stop containers.

Difference between Docker and Virtual Machine

People often confuse Docker with virtual machines, but they are two distinct things. Here’s the difference between Docker and a virtual machine:


- Definition: A Docker container is a group of processes managed by a shared kernel, whereas a virtual machine runs its own operating system and shares the host hardware through a hypervisor.

- Image sizes: Docker images are typically measured in MBs, while virtual machine images are typically measured in GBs.

- Boot-up speed: Docker containers boot up faster than virtual machines.

- Efficiency: Because containers share the host kernel, Docker is generally more resource-efficient than a virtual machine.

- Scaling: Docker makes it easy to scale applications, whereas scaling applications on virtual machines is more difficult.

- Space allocation: Docker can share and reuse data volumes among various Docker containers, whereas virtual machines cannot share data volumes with other virtual machines.

- Portability: Docker is easily portable. Virtual machines may face compatibility issues across different platforms.

- Availability: Docker is an open-source platform, while virtual machine software is available in both paid and open-source (free) forms.

When to use Docker

Docker can be used as a core building block when creating modern applications and platforms. Developers first need to identify their problems and analyze when to use Docker. Building and running microservice architectures, and deploying code through standardized continuous integration and delivery pipelines, can be done easily with Docker. It also helps you to build highly scalable data processing systems and create fully managed environments.

- Microservices: When you want to build and scale applications by leveraging standardized code deployments.

- Continuous Integration and Delivery: When you want to accelerate the delivery of applications. Docker speeds up the process by standardizing environments and removing conflicts between stacks and versions.

- Data Processing: When you are dealing with big data, Docker lets you package data and analytics tools into portable containers that even non-technical users can run.

What is Docker in DevOps?

Docker is the perfect platform for the DevOps environment. It is a good fit for organizations that struggle to keep up with changing technology, business or customer requirements. It scales up and speeds up operations in the company. Additionally, Docker is known for its ability to containerize applications, which reduces the time needed to develop and release a solution, for example at a web development company.

Docker in DevOps can also help overcome the challenges of Development and Operations. It allows applications to run consistently in any environment without worrying about the host configuration. Docker in DevOps helps to streamline and handle the changes that occur throughout the development cycle. Docker also allows you to roll back to previous versions of an application.

Suitability of Docker in DevOps

Docker is a tool that benefits both developers and administrators, which is why it is a part of many DevOps toolchains. Developers can concentrate on coding without fretting over the eventual deployment system, thanks to Docker’s open-source environment. They have the liberty to utilize pre-built applications designed by others to operate seamlessly in Docker containers.

Additionally, Docker in DevOps gives operations staff the flexibility to test the code in any environment. Since Docker images are small, it also reduces the cost of storage and maintenance.

Apart from this, Docker in DevOps also works well alongside AWS. The role of AWS in DevOps is quite significant, as it is highly scalable and secure. If you wish to build a comprehensive system, you can describe it with declarative-style AWS CloudFormation templates.

What are the benefits of using Docker in DevOps?

Docker in DevOps can be game-changing. It helps teams to collaborate. Here are some benefits of Docker in DevOps:

- Docker in DevOps helps to create applications using different interconnected components.

- Docker in DevOps also gives a high level of control over changing environments. It also lets you go back to the previous version of the application.

- It helps the application to run in different environments.

- You may face challenges in DevOps implementation, and Docker in DevOps will help to ease your process.

How to install Docker

For the latest updates, you should always refer to the official documentation.

Docker can be installed on Linux distributions using the package manager. For instance, on Ubuntu you might use ‘sudo apt install docker.io’ to install Docker directly from Ubuntu’s own package repository. On Linux, Docker commands often require sudo to grant administrative privileges. However, for simplicity, we’ll omit sudo from Docker commands in this discussion.
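As a rough sketch of the Ubuntu route described above, the steps can be wrapped in two small shell functions. The function names are made up for illustration, and only the docker.io package name comes from the text; the official documentation remains the authority on the current installation steps.

```shell
# Hypothetical helper names; only the docker.io package name comes from the text above.

# Install Docker from Ubuntu's package repository (needs sudo rights).
install_docker_ubuntu() {
  sudo apt update
  sudo apt install -y docker.io
}

# Print the installed Docker version, or fail if the CLI is not on the PATH.
check_docker() {
  if command -v docker >/dev/null 2>&1; then
    docker --version
  else
    echo "docker CLI not found" >&2
    return 1
  fi
}
```

Calling install_docker_ubuntu followed by check_docker should print a version string if the installation succeeded.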

The Docker daemon relies on Linux-specific features, so to run Docker on macOS or Windows you would use Docker Desktop, which provides a native application backed by a lightweight virtual machine (VM). Docker Desktop includes Docker Engine, Docker CLI client, Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper. The official installation guide for Docker Desktop is the best resource for installing Docker on these non-Linux platforms.

Using Docker

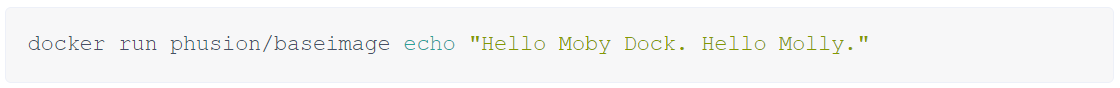

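Let’s start with a quick example: run a container from the phusion/baseimage image and have it execute a single echo command. The command prints the greeting “Hello Moby Dock. Hello Molly.” and exits.

Here is what happens when you run it:

- Docker checks for the phusion/baseimage image on your local machine. If it’s not found locally, Docker downloads it from Docker Hub.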

- Docker then creates a new container from that image.

- Within this newly created container, it runs the echo “Hello Moby Dock. Hello Molly.” command.

- Once the command has finished running, the container stops.

During the first execution, you may experience a delay: if the image has not been cached, it needs to be downloaded from Docker Hub. Once the image is cached locally, subsequent starts will be significantly quicker since Docker can use the local version.
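The run described above can be sketched as a one-line shell function. The image name and the message follow the walkthrough; the function name is only illustrative.

```shell
# Image name and message follow the walkthrough; the function name is illustrative.

# Start a throwaway container that prints a greeting and then exits.
hello_container() {
  docker run phusion/baseimage echo "Hello Moby Dock. Hello Molly."
}
```

Running hello_container should print the greeting. On the first run, Docker will also print download progress while it pulls the image.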

To check the details of the container that was just run, you can use the ‘docker ps -l’ command, which shows information about the most recently created container.

| CONTAINER ID | IMAGE | COMMAND | CREATED | STATUS | PORTS | NAMES |
| --- | --- | --- | --- | --- | --- | --- |
| af14bec37930 | phusion/baseimage:latest | "echo 'Hello Moby Do | 2 minutes ago | Exited (0) 3 seconds ago | | stoic_bardeen |

Running the Docker file

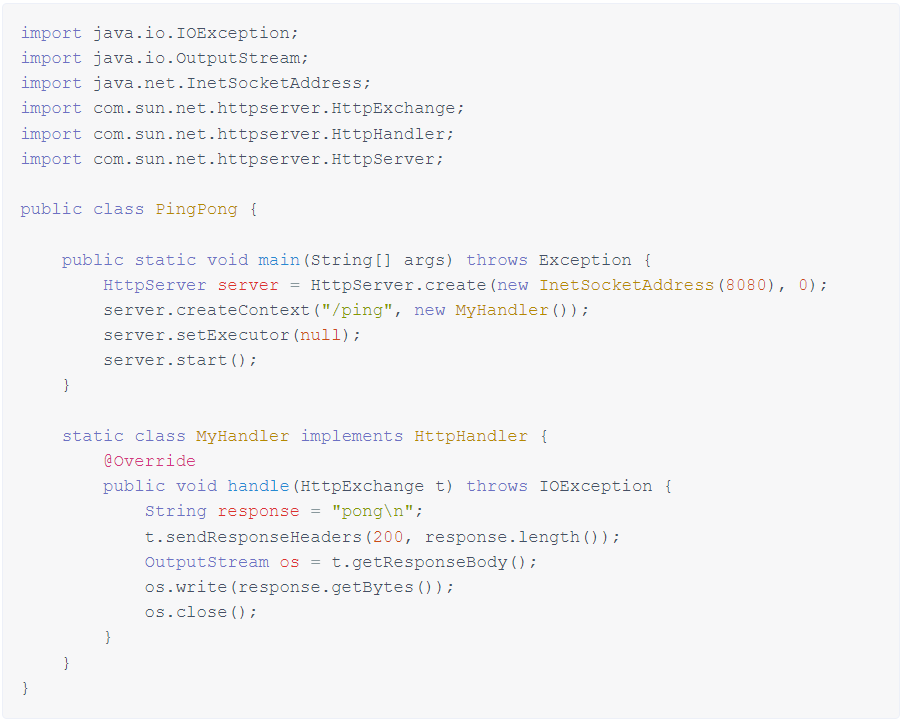

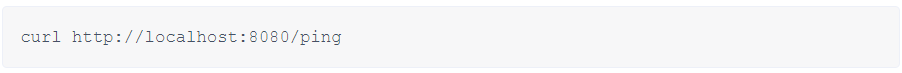

Running commands in Docker can be as easy as executing them in a normal command line interface. To demonstrate a practical scenario, we will explore using Docker to set up a basic web server. The server will be a Java application that responds to HTTP GET requests at the ‘/ping’ endpoint with the response ‘pong\n’.

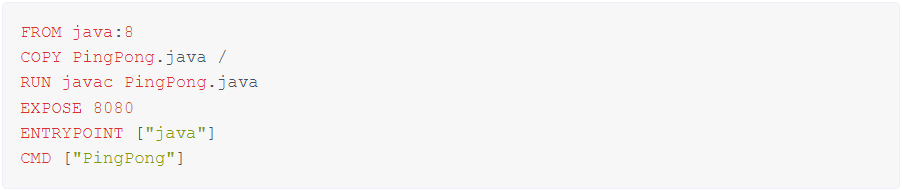

Dockerfile

Before creating a Docker image, it’s wise to search Docker Hub or other accessible private repositories for an existing image. For instance, you can utilize the official java:8 image instead of manually installing Java.

To construct a Docker image:

- FROM: Select a base image, indicated by the FROM instruction. In this context, it’s the official Java 8 image from Docker Hub.

- COPY: Transfer your Java file into the image using the COPY instruction.

- RUN: Compile the Java file within the image using the RUN instruction.

- EXPOSE: Communicate which port will be used by the container with the EXPOSE instruction.

- ENTRYPOINT: Define the default executable for when the container starts with the ENTRYPOINT instruction.

- CMD: Specify the default arguments to the ENTRYPOINT with the CMD instruction.

These Dockerfile instructions compile your Java file and prepare it to run in a Docker container, with the application listening on the port you declared once the container is launched.

Once you’ve written these instructions into a file named “Dockerfile,” you can construct the Docker image by running the appropriate build command.
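Putting the six instructions together, a minimal sketch might look like the following. It writes a small Java ping server and a matching Dockerfile into a directory, and wraps the build command in a second function. The class name PingServer, the port 8080, the image tag ping-server and both function names are assumptions made for illustration and do not come from the original example.

```shell
# Sketch only: PingServer, port 8080 and the ping-server tag are illustrative assumptions.

# Write a minimal Java ping server and a matching Dockerfile into the given directory.
scaffold_ping_app() {
  local dir="$1"
  mkdir -p "$dir"

  # Tiny HTTP server built on the JDK's com.sun.net.httpserver package.
  # It answers requests to /ping with "pong\n" (it does not check the HTTP method).
  cat > "$dir/PingServer.java" <<'EOF'
import com.sun.net.httpserver.HttpServer;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

public class PingServer {
    public static void main(String[] args) throws Exception {
        HttpServer server = HttpServer.create(new InetSocketAddress(8080), 0);
        server.createContext("/ping", exchange -> {
            byte[] body = "pong\n".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
    }
}
EOF

  # One line per instruction discussed in the list above.
  cat > "$dir/Dockerfile" <<'EOF'
# java:8 is the image named in the text; it is deprecated on Docker Hub, so a
# maintained Java 8 image (such as eclipse-temurin:8-jdk) may be needed instead.
FROM java:8
COPY PingServer.java /
RUN javac PingServer.java
EXPOSE 8080
ENTRYPOINT ["java"]
CMD ["PingServer"]
EOF
}

# Build an image tagged ping-server from the Dockerfile in the given directory.
build_ping_image() {
  docker build -t ping-server "$1"
}
```

For example, scaffold_ping_app ./ping-app followed by build_ping_image ./ping-app would create the two files and build the image from them. Splitting ENTRYPOINT and CMD this way keeps java as the fixed executable while leaving the class name as a default argument that can be overridden at run time.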

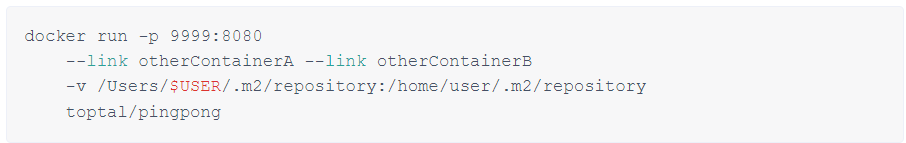

Running the Container

After the image is built, you can start it as a container. There are multiple methods for starting containers, but let’s proceed with a basic approach.

The -p option specifies the port mapping between the host and the container, with the format [host-port]:[container-port]. We also tell Docker to run the container in detached mode, which means it operates in the background. This can be done by including the -d flag.
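A minimal sketch of these two flags in use, assuming an image tagged ping-server whose application listens on port 8080 inside the container (both are assumptions made for illustration, as are the function names):

```shell
# Assumptions: an image tagged ping-server that listens on port 8080 inside the container.

# Start the container in the background and publish container port 8080 on host port 8080.
run_ping_container() {
  docker run -d -p 8080:8080 ping-server
}

# Send a GET request to the /ping endpoint through the published host port.
check_ping() {
  curl -s http://localhost:8080/ping
}
```

With -d, docker run prints the new container’s ID and returns immediately, so check_ping can be called right afterwards from the same shell.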

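You can then send a GET request to the ‘/ping’ endpoint to check that the server is reachable. On Linux, the published port is available on localhost. On platforms requiring Boot2Docker, use the IP address of the Boot2Docker virtual machine instead.

If everything is right, you should see the response ‘pong’.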

Hurray, your custom Docker container is up and running! The container can also be launched in interactive mode using the -i and -t flags. By doing so and overriding the ENTRYPOINT, you’ll get a bash shell within the container. This allows you to execute any commands as needed, but remember, closing the shell will also stop the container.
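As a sketch of the interactive mode described above, the following function starts a new container with bash in place of the image’s ENTRYPOINT. The default image name ping-server and the function name are assumptions, and the image needs to contain bash for this to work.

```shell
# Open an interactive bash shell in a new container, replacing the image's ENTRYPOINT.
# ping-server is an assumed default image name; the image must contain bash.
shell_into_image() {
  docker run -i -t --entrypoint bash "${1:-ping-server}"
}
```

Calling shell_into_image with no argument uses the assumed default image, and shell_into_image my-image targets another one. Typing exit in the shell ends the session and stops the container.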

Docker Container Setup with Persistent Volumes and Links

Docker provides a variety of options for configuring container startup. You can mount a directory from the host into the container using the -v flag, so that data persists even after the container stops. Mounted volumes are read-write by default, but can be made read-only by adding :ro to the end of the container’s volume path. Volumes are extremely useful for sensitive data such as credentials and private keys that should not be included in the image, and they also help to avoid duplicating data. For instance, you can map your local Maven repository into the container, which prevents re-downloading dependencies.

Furthermore, Docker can link containers, allowing communication without exposing ports, utilizing the --link option followed by the name of another container. Here is an illustrative command incorporating these features:

docker run -d -v /path/on/host:/path/in/container:ro --link other-container-name my-image

This command starts a container in detached mode with a read-only volume mounted from the host and establishes a link to another running container.
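The Maven repository mapping mentioned earlier could look roughly like this. The sketch assumes the container runs as root, so that Maven looks for its local repository under /root/.m2, and the function name is made up for illustration. The volume is left read-write so that newly downloaded dependencies land in the shared repository.

```shell
# Mount the host's Maven repository into the container so dependencies are reused.
# Assumes the container runs as root, whose Maven repository lives in /root/.m2.
run_with_maven_cache() {
  local image="$1"
  docker run -d -v "$HOME/.m2:/root/.m2" "$image"
}
```

For example, run_with_maven_cache my-image starts my-image in detached mode with the host repository mounted.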

Other Container and image operations

There is a vast array of commands available for managing containers and images in Docker. Let’s briefly review some of the key ones:

- stop: Halts a container that is currently running.

- start: Reactivates a container that has been stopped.

- commit: Captures the current state of a container and saves it as a new image.

- rm: Deletes one or more containers.

- rmi: Erases one or more images from the system.

- ps: Displays a list of running containers; add -a to include stopped ones.

- images: Shows all the images available locally.

- exec: Executes a specific command within an active container.

The exec command is notably useful for troubleshooting, as it allows you to open a terminal session inside a running container for direct interaction:

‘docker exec -i -t <container-id> bash’
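Several of the commands listed above are often used together when cleaning up. A minimal sketch, in which the function name and both arguments are placeholders chosen for illustration:

```shell
# Stop and delete a container, then delete the image it was created from.
# Both arguments are placeholders supplied by the caller.
remove_container_and_image() {
  local container="$1" image="$2"
  docker stop "$container" &&
    docker rm "$container" &&
    docker rmi "$image"
}
```

The && chaining means a later step only runs if the previous one succeeded, so an image is never removed while its container could not be stopped or deleted.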

If installing Docker feels like a big task, or it is something you are not confident doing, you can always consult the best DevOps consulting in Toronto.

Conclusion

As we come to the end of this exploration of Docker in DevOps, it is clear that Docker not only complements DevOps but also enhances how it works. Docker in DevOps has redefined the paradigms of software development, delivery and deployment: it ensures seamless deployment of software and reduces the time to market. Embracing Docker in DevOps pipelines translates into a scalable and system-agnostic development process, empowering teams to achieve higher efficiency. As the software landscape evolves, Docker in DevOps will undoubtedly remain a key player, driving excellence and innovation forward.
